Implications of AI Manipulation

In the Western world, there have been instances where AI models appear to have been tuned to favor certain political narratives instead of providing unbiased analysis. Some major AI developers have been criticized for adjusting their models to either stifle opposing viewpoints or promote specific ideological standpoints. A notable case took place in 2024, when Google's Gemini generative AI tool began producing images that depicted the U.S. Founding Fathers and Nazi-era German soldiers as people of color, despite the obvious historical inaccuracy. The errors stemmed from Google's attempt to correct perceived racial bias in Gemini's outputs, which overcompensated by inserting diversity where it did not belong.

The crucial question we face is straightforward: if AI can propagate a government-endorsed narrative in China, what prevents businesses, activist groups, or even Western governments from using AI for similar purposes?

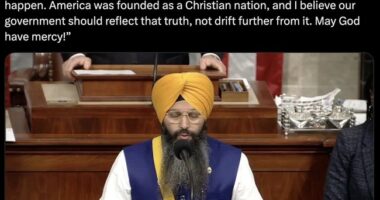

It is not far-fetched to think that American companies might go beyond promoting diversity in their algorithms. In recent years, a growing number of companies have aligned themselves with Environmental, Social, and Governance (ESG) standards. This framework emphasizes social justice causes and other contentious issues, and it shapes how businesses are run. At the same time, many social media platforms have taken aggressive measures to curb content they label as "misinformation."

The AI industry is growing rapidly, often with little transparency or oversight. As a result, the values embedded in these systems are largely determined by their creators. Without transparency and accountability, AI could become the most powerful propaganda tool in human history, capable of filtering search results, rewriting history, and nudging societies toward preordained conclusions.

Vigilance Is Imperative

This moment demands vigilance. The public must recognize the power AI has over the flow of information and remain skeptical of models that show signs of ideological manipulation. Scrutiny should extend beyond AI developed in adversarial nations to models created by major tech companies in the United States and Europe.

AI leaders must commit to producing truly objective, bias-free systems. This means ensuring transparency in training data and resisting pressure, whether from governments, corporations, or activist groups, to embed biased narratives in AI. The future of AI should be built on trust, not agenda-driven programming.

AI is poised to become one of the most influential forces in modern society. Whether it remains a tool for free thought or becomes an instrument of ideological control and tyranny depends on the choices made today.